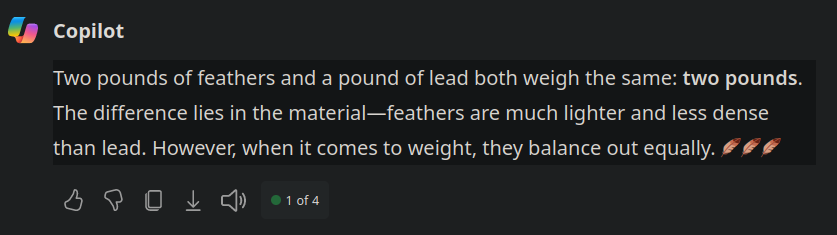

I love that example. Microsoft’s Copilot (based on GPT-4) immediately doesn’t disappoint:

It’s annoying that for many things, like basic programming tasks, it manages to generate reasonable output that is good enough to goad people into trusting it, yet it hallucinates very obviously wrong stuff or follows completely insane approaches on anything off the beaten path. Every other day, I have to spend an hour justifying to a coworker why I wrote code a certain way because the AI has given him another “great” suggestion, like opening a hidden window with a UI control to query a database instead of going through our ORM.

After reading, the gist of it seems to be:

.

In short, just another out-of-touch entrepreneur who sells snake-oil cures to people suffering in the current system, so that they may invite in the boot that stomps them down for good.