Self-driving cars are often marketed as safer than human drivers, but new data suggests that may not always be the case.

Citing data from the National Highway Traffic Safety Administration (NHTSA), Electrek reports that Tesla disclosed five new crashes involving its robotaxi fleet in Austin. The new data raises concerns about how safe Tesla’s systems really are compared to the average driver.

The incidents included a collision with a fixed object at 17 miles per hour, a crash with a bus while the Tesla vehicle was stopped, a crash with a truck at four miles per hour, and two cases where Tesla vehicles backed into fixed objects at low speeds.

It’s important to draw the line between what Tesla is trying to do and what Waymo is actually doing. Tesla has a crash rate 4x higher than human drivers, while Waymo’s rate is lower.

Because Waymo uses more humans?

Because Waymo doesn’t try and do FSD with only cameras.

Wow, thank goodness nobody gutted the authority in charge of making sure that wouldn’t happen…

The AI companies put out a presser a few years back that said “Um, aktuly, its the humans who are bad drivers” and everyone ate that shit up with a spoon.

So now you’ve got Waymos blowing through red lights and getting stuck on train tracks, because “fuck you fuck you stop fighting the innovation we’re creatively disruptive we do what we want”.

That doesn’t mean that waymo is more error prone than human drivers.

Humans are awful at driving and do stuff like stop on train tracks and blow through red lights all the time.

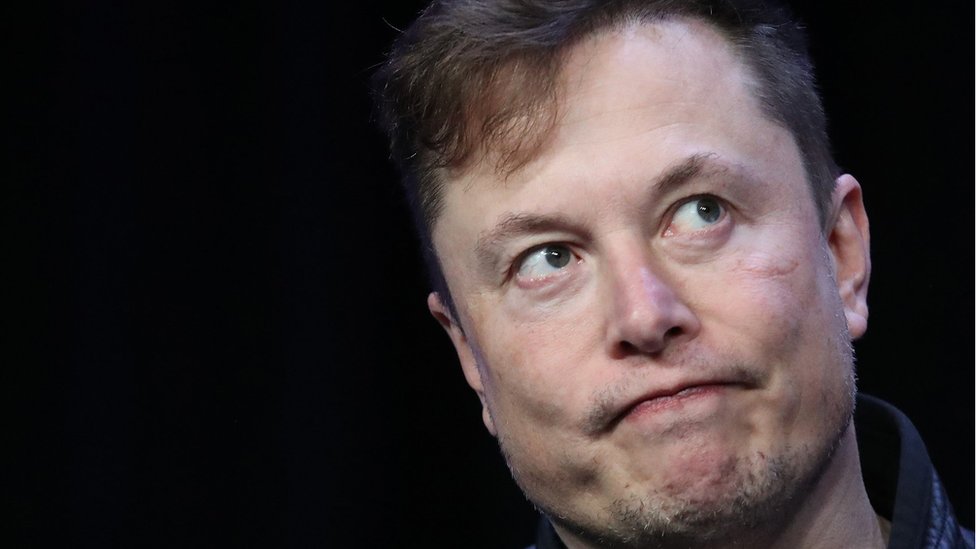

Musk = POS Nazi.

POS Nazi *pedophile

I do (sarcastically) love knowing Leave the World Behind is a documentary.

Thanks Obama.

Even for the first piss poor epigone of Neuromancer, the name “Robotaxi” would’ve been laughed at.

Mulon Esk made the dumbest name happen for the xth time.

Use lidar you ketamine saturated motherfucker

But then he would have to admit being wrong for removing radar…

Can’t do that. Then he would have to upgrade all legacy cars. And he is missing the lidar dataset.

The best time to add lidar would have been years ago, the second best time is right now. I don’t think he would have to update the old cars, it could just be part of the hardware V5 package. He’s obviously comfortable with having customers beta testing production vehicles so he can start creating a lidar set now or he can continue failing to make reliable self-driving cars.

Agree, but since he has stated multiple times that all cars since xxx years were hardware capable of L5 self-driving next year (no need to specify the year, the statement is repeated every year), adding LIDAR now would open the way to a major class action. So he painted himself into a corner, and like all gigantic-ego idiots, he doubles down every time he’s asked.

I agree with you. Musk’s ego doesn’t.

Optical recognition is inferior and this is not surprising.

Bro, anybody who has watched a Predator movie knows this is fact.

Just how much K do you need to take to argue this?

Yeah that’s well known by now. However, safety through additional radar sensors costs money and they can’t have that.

Nah, that one’s on Elon just being a stubborn bitch and thinking he knows better than everybody else (as usual).

Well, I mean, if you believe that it is possible in a safe way, it’s the one thing that Tesla’s got going for it compared to Waymo, which is way ahead of them. Personally I don’t, but I can see the sunk cost.

He’s right in that if current AI models were genuinely intelligent in the way humans are, then cameras would be enough to achieve at least human-level driving skills. The problem, of course, is that AI models are not nearly at that level yet.

Also, the human brain is still on par with some of the world’s best supercomputers; I doubt a Tesla has that much onboard processing power.

Good point. Though I’ve heard some of these self-driving cars connect remotely to a person to help drive when the AI doesn’t know what to do, so I guess it’s conceivable that the car could connect to the cloud. That would be super error prone though. Connectivity issues could brick your car.

Even if they were, would it not be better to give the car better senses?

Humans don’t have LIDAR because we can’t just hook something into a human’s brain and have it work. If you can do that with a self-driving car, why cut it down to human senses?

Exactly, with this logic why have motors or wheels?

You don’t have wheels so you shouldn’t use cars

I agree it would be better. I’m just saying that in theory cameras are all that would be required to achieve human level performance, so long as the AI was capable enough

Except if I get something to replace me, it had better do better than me, not merely just as good. So I would expect better sensors.

Except humans have self cleaning lenses. Cars don’t.

“So long as the AI has the same intelligence as a human brain” is a pretty big assumption. That assumption is in sci-fi territory.

Yeah, that’s my point

I am a Human and there were occasions where I couldn’t tell if it’s an obstacle on the road or a weird shadow…

Yes. In theory cameras should be enough to get you up to human level driving competence but even that is a low bar.

I feel like camera-only could theoretically pass human performance, but that hinges entirely on AI models that do not currently exist, and on those models, when they do exist, being capable of running inside a damn car.

At that point, it’d be cheaper to just add LiDAR…

Cameras are inferior to human vision in many ways. Especially the ones used on Teslas.

Lower dynamic range for one.

Genuinely asking how so?

Are Tesla cameras even binocular?

Just one more AI model, please, that’ll do it, just one more, just you wait, have you seen how fast things are improving? Just one more. C’mon, just one more…

I NEED ONE MORE FACKIN’ AI MODEL!!

I’m not too sure it’s about cost, it seems to be about Elon not wanting to admit he was wrong, as he made a big point of lidar being useless

I don’t think it’s necessarily about cost. They were removing sensors both before costs rose and supply became more limited with things like the tariffs.

Too many sensors also cause issues; adding more is not an easy fix. Sensor fusion is a notoriously difficult part of robotics. It can help with edge cases and verification, but it can also exacerbate issues. Sensors will report different things at some point. Which one gets priority? Is a sensor failing or reporting inaccurate data? How do you determine what is inaccurate if the data is still within normal tolerances?
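To make the priority question concrete, here is a rough sketch of one textbook approach, inverse-variance weighting, plus a simple pairwise disagreement check. This is not how Tesla or Waymo does it; the `Reading` class, the sensor names, the noise figures, and the 3-sigma threshold are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor: str        # hypothetical sensor label, e.g. "camera"
    distance_m: float  # estimated distance to the obstacle, in metres
    sigma_m: float     # assumed 1-sigma measurement noise, in metres


def fuse(readings):
    """Inverse-variance weighted mean: less noisy sensors get more weight."""
    weights = [1.0 / (r.sigma_m ** 2) for r in readings]
    total = sum(weights)
    estimate = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    sigma = (1.0 / total) ** 0.5
    return estimate, sigma


def disagreements(readings, threshold=3.0):
    """Return sensor pairs that differ by more than `threshold` combined
    standard deviations. This only flags a conflict; it cannot tell which
    of the two sensors is the wrong one."""
    flagged = []
    for i, a in enumerate(readings):
        for b in readings[i + 1:]:
            combined = (a.sigma_m ** 2 + b.sigma_m ** 2) ** 0.5
            if abs(a.distance_m - b.distance_m) > threshold * combined:
                flagged.append((a.sensor, b.sensor))
    return flagged


# Made-up numbers: the camera is about 12 m off from the other two sensors.
readings = [
    Reading("camera", 42.0, 4.0),
    Reading("radar", 30.5, 1.0),
    Reading("lidar", 30.0, 0.5),
]
estimate, sigma = fuse(readings)
conflicts = disagreements(readings)

# Same scene, but the camera drifts a little further out.
worse = [Reading("camera", 45.0, 4.0)] + readings[1:]
worse_conflicts = disagreements(worse)
```

With these numbers the fused estimate sits close to the lidar value, and the first set raises no conflict at all even though the camera is roughly 12 m off, because its assumed noise is large enough to keep it "within tolerance". Only the second set trips the check, and even then the code can say that the camera disagrees with the other two, not that the camera is the one at fault.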

More on topic though… My question is why is the robotaxi accident rate different from the regular FSD rate? Ostensibly they should be nearly identical.

Regular FSD has the driver (you) monitoring the car, so there will be fewer accidents IF you properly stay attentive as you’re supposed to.

The FSD rides with a safety monitor (passenger seat) had a button to stop the ride.

The driverless and no monitor cars have nothing.

So you get more accidents as you remove that supervision.

Edit: this would be on the same software versions… it will obviously get better to some extent, so comparing old versions to new versions really only tells us whether it’s getting better or worse relative to past rates, but in all 3 scenarios there should still be different accident rates on the same software.

The unsupervised cars are very unlikely to be involved in these crashes yet because according to Robotaxi tracker there was only a single one of those operational and only for the final week of January.

As you suggest, there’s a difference in how much the monitor can really do about FSD misbehaving compared to a driver in the driver’s seat though. On the other hand, they’re still forced to have the monitor behind the wheel in California, so you wouldn’t expect a difference in accident rate based on that there. It would be interesting to compare.

There are multiple unsupervised cars around now. It was only the 1 before the earnings call (that went away), then a few days after earnings they came back and weren’t followed by chase cars. There’s a handful of videos over many days out there now if you want to watch any. The latest gaffe video I’ve seen is from last week, where it drove into a (edit: road closed) construction zone that wasn’t blocked off.

I would still expect a difference between California and people like you and me using it.

My understanding is that in California, they’ve been told not to intervene unless necessary, but when someone like us is behind the steering wheel what we consider necessary is going to be different than what they’ve been told to consider necessary.

So we would likely intervene much sooner than the safety driver in California, which would mean we were letting the car get into fewer situations we perceive to be dicey.

Yeah, I’ve seen that video and another where they went back and forth for an hour in a single unsupervised Tesla. One thing to note is that they are all geofenced to a single extremely limited route that spans about a 20 minute drive along Riverside Dr and S Lamar Blvd, with the ability to drive on short sections of some of the crossing streets there, and that’s it.

They’ll work perfectly as soon as AI space data center robots go to Mars. I’d say a Robovan will be able to tow a roadster from New York to Hong Kong by… probably July. July or November at the latest.

I really fucking hate how his fans can just listen to him lie like this over and over and it doesn’t affect their opinion of him. I remember falling for it a couple times before I started asking “Is this like the last time you promised dates?”

By that time it was a moot point, however, because that “pedo guy” comment was just around the corner. Now anyone who likes him after that needs to go to therapy to figure out a few things.

I won’t comment on people who support him after the other things.

I will! They’re fucking stupid sheep

“a crash with a bus while the Tesla vehicle was stopped”

Uuh… wouldn’t that be the fault of the bus? I mean, the system is faulty as fuck so there’s really no need to mix in shit like this; it reduces the legitimacy of the otherwise very valid criticism.

That depends entirely where the Tesla stopped, and under what conditions.

I’m betting it stopped in the path of it. Either by pulling out in front of it, or sitting on the inside of the truck whilst turning.

Eh, not really though. Generally if your car is stopped, even in the middle of the road, you are not at fault if someone else hits you. You can still get fined for obstruction of traffic, but the incident is entirely the fault of the moving vehicle.

If you stop in the middle of a highway you absolutely are at fault.

Tesla robotaxis don’t go anywhere near highways currently.

Entirely possible, but all incidents are counted as it would probably be difficult to produce reliable stats where you’re leaving out some based on some kind of an assessment of blame.

Because Tesla hides most of the details unlike the competition we can’t really look at a specific one and know.

This is a really funny thing to see a few scrolls down from an article about Tesla’s first steering-wheel-less vehicle and finally “solving autonomous driving”.

Who insures these things?

Tesla

Are they even insured like typical insurance?

If Tesla owns it, don’t they just pay out of pocket as needed, they don’t actually have a monthly payment to themselves or anything?

There’s no way, then why can’t I drive around “uninsured” with the promise I’ll pay out of my pocket for any damage.

I’m not sure if this is available everywhere, but in California you can put up a bond in lieu of insurance with the DMV, either with your own money or with a surety bond company.

So you can do it, they just require proof in the form of the bond that the money is available when needed. They won’t just take your word for it. They might take the word of a company with a $1.3 trillion market cap, which is probably a bad idea. You get hit with one of these fuckers and instead of the admittedly shitty but known process of dealing with an insurance company, now you have to deal with a huge company that doesn’t want to admit their dumb camera only system is at fault.

This is what I was thinking was possible ya. If they have enough money, they could just cover it themselves.

I really don’t know if that can be done everywhere though.

And ya, as an individual self insuring this way, it would be a disaster going against a behemoth like Tesla.

Companies routinely purchase insurance against their own liabilities.

What auto insurance company would insure an unproven tech like this at a reasonable rate?

If someone’s willing to insure it, it must cost an arm and a leg, at least at this point in the cycle?

They’re 4 times as capable of crashing as a human driver. How efficient!

Whaaa how do you do subscript (?) text! Aaaaah!

Tildes: ~subscript~ ~text~ = subscript text.

Oh, you sexy escapist!

Nice! Thanks!

And Newsom doesn’t give a shit

What does this have to do with Newsom? Tesla isn’t allowed to operate this way in California, the accidents are from the Texas data.

It’s a parallel effect: without someone setting a better example, forcing unsafe competition just makes crazy even crazier.

Not sure what you’re getting at, but I think this makes it seem like California regulators are doing their job, since they’ve not let Tesla test without someone behind the wheel yet, while Texas is completely derelict in its duty, even allowing them unsupervised despite these terrible stats.